Save Yourself Time and Money with Automated Data Collection

Whether you simply want to scrape data or run a few web crawling jobs, Fminer can handle all kinds of tasks.

Dexi.io is a well-known web-based scraper and data application. You do not need to download any software, because you can run your jobs online. It is a browser-based tool that lets you save the scraped data directly to Google Drive and Box.net. It can also export your files to CSV and JSON formats, and it supports anonymous scraping through its proxy server.
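To make the CSV and JSON export step concrete, here is a minimal sketch using only Python's standard library. It is not Dexi.io's API; the `records` list, its field names, and the helper functions `to_csv` and `to_json` are hypothetical and stand in for whatever a scraper returns.

```python
import csv
import io
import json

# Hypothetical scraped records; the field names are illustrative only.
records = [
    {"title": "Widget", "price": "19.99"},
    {"title": "Gadget", "price": "4.50"},
]


def to_json(rows):
    """Serialize the rows as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Serialize the rows as CSV text; assumes at least one row and identical keys."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_csv(records))
print(to_json(records))
```

Writing to an in-memory buffer keeps the sketch self-contained; in practice the same writer could target a file opened with `newline=""`.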

Parsehub is one of the best-known web scraping programs, and it collects data without requiring any programming or development skills. It supports both complex and simple data and can process websites that use JavaScript, AJAX, cookies, and redirects. Parsehub is a desktop application for Mac, Windows, and Linux users. It can handle up to five crawl projects at a time, while the premium version can handle more than thirty crawl projects simultaneously. If your data requires custom-built setups, this DIY tool is not ideal for you.

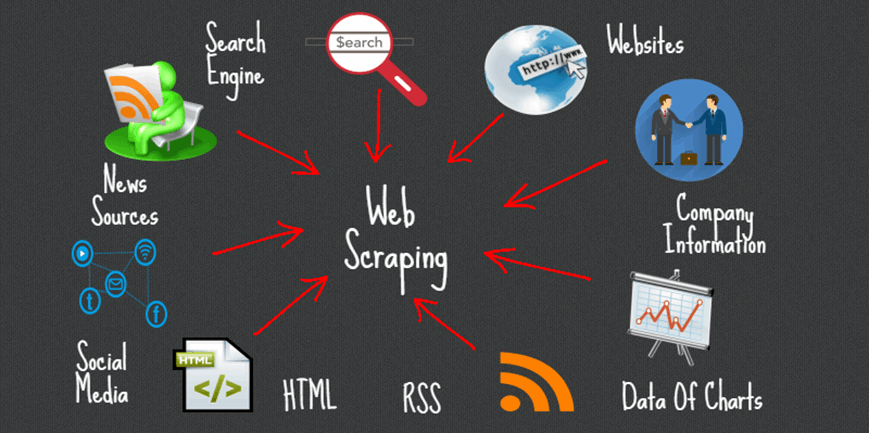

Web scraping, also known as web harvesting, involves using a computer program to extract data from another program's display output. The main difference between ordinary parsing and web scraping is that, in scraping, the output being processed is intended for display to human readers rather than as input to another program.

As a result, that output is usually neither documented nor structured for convenient parsing. Web scraping generally requires ignoring binary data (usually multimedia or images) and then stripping out the formatting that would obscure the actual target, which is the text data. In that sense, optical character recognition software is a form of visual web scraper.

Normally, a transfer of data between two programs uses data structures designed to be processed automatically by computers, which saves people from having to do this tedious job themselves. This usually involves formats and protocols with rigid structures that are therefore easy to parse, well documented, compact, and designed to minimize duplication and ambiguity. In fact, they are so "computer-oriented" that they are often not readable by humans at all.
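As a small illustration of why rigidly structured formats are easy to parse, the sketch below reads a JSON document with Python's standard `json` module. The `payload` string and its field names are made up for this example; JSON is used here only as one familiar instance of such a format.

```python
import json

# Hypothetical payload that one program might send to another.
payload = '{"product": "Widget", "price": 19.99, "in_stock": true}'


def parse_record(raw: str) -> dict:
    """Parse a JSON document into a dict; malformed input raises ValueError."""
    return json.loads(raw)


record = parse_record(payload)
# Every field is addressable by name, with no guessing about page layout.
print(record["product"], record["price"], record["in_stock"])
```

A single library call recovers every field with its type, which is exactly what display-oriented output does not offer.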

When the data is only available in human-readable form, the only automated way to accomplish this kind of data transfer is web scraping. Originally, the technique was used to read text data from a computer's display screen. This was usually done by reading the terminal's memory through its auxiliary port, or through a connection between one computer's output port and another computer's input port.

Since then, web scraping has become a way to parse the HTML text of web pages. A web scraping program is designed to process the text data that is of interest to the human reader, while identifying and removing any unwanted data, images, and formatting that belong to the page design. Although web scraping is often done for legitimate reasons, it is also frequently used to take valuable data from another person's or organization's website and reuse it on someone else's, or even to sabotage the original text altogether. Webmasters are now putting many measures in place to prevent this kind of theft and vandalism.
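The text-extraction step described above (keep the text a human would read, drop images, scripts, and styling) can be sketched with Python's standard `html.parser` module. The class name `TextExtractor`, the helper `extract_text`, and the `sample` page are assumptions made for illustration, not the method of any tool named in this article.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text and skip the contents of script and style tags."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # greater than 0 while inside a skipped tag
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        # Tags such as <img> carry no text, so they are dropped implicitly.
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return the page's visible text joined by single spaces."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical page used only for this example.
sample = """<html><head><style>p { color: red; }</style></head>
<body><h1>Price list</h1><img src="logo.png"><p>Widget: <b>$19.99</b></p>
<script>track();</script></body></html>"""

print(extract_text(sample))  # prints: Price list Widget: $19.99
```

Real pages are messier than this sample, so a production scraper would typically add error handling and respect the site's terms of use.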